During our recent AWS research I observed again how their billing seems to lack clarity and granularity. That is, it’s very difficult to figure out in detail what’s going on and where particular charges actually come from. If you have a large server farm at AWS, that’s going to be a problem. Unless of course you just pay whatever and don’t mind what’s on the bill?

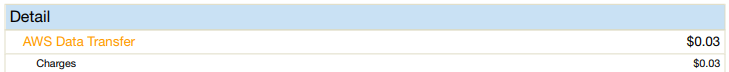

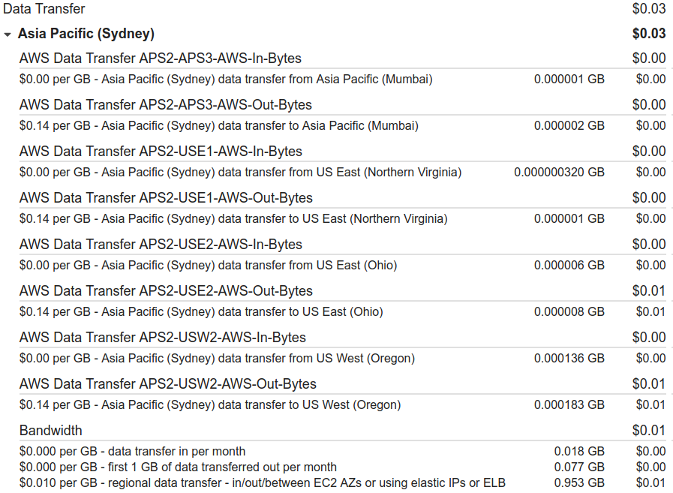

Of particular interest was a charge for inter-AZ (DC) traffic. Not because it was large, it was just a few cents. But it was odd. So this would be outbound traffic from our short-lived test server(s) to another AWS availability zone (datacenter) in the US. This gets charged at a couple of cents per GB.

I had a yarn with billing support, and they noted that the AWS-side firewall had some things open, and because they can’t see what goes on inside a server, they regarded it as valid traffic. The only service active on the server was SSH, and it keeps its own logs. While doing the Aurora testing we weren’t specifically looking at this, so by the time the billing info showed up (over a day later), the server had already been decommissioned, and those logs along with it.

As a sidenote, presuming this was “valid” traffic, someone was using AWS servers to scan other AWS servers and try and gain access. I figured such activity clearly in breach of AWS policies would be of interest to AWS, but it wasn’t. Seems a bit neglectful to me. And with all the tech, shouldn’t their systems be able to spot such activities even automatically?

Some days later I fired up another server specifically to investigate the potential for rogue outbound traffic. I again left SSH accessible to the world, to emulate the potential of being accessed from elsewhere, while keeping an eye on the log. This test server only existed for a number of hours, and was fully monitored internally so we know exactly what went on. Obviously, we had to leave the AWS-side firewall open to be able to perform the test. Over those hours there were a few login attempts, but nothing major. There would have to be many thousands of login attempts to create a GB of outbound traffic: no SSH connection actually gets established, and the attempts don’t get beyond the authentication stage, so it’s just some handshake and the rejection going out. So no such traffic was seen in this instance.
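
To put a rough number on "many thousands", here is a back-of-envelope sketch. The bytes-per-attempt figures are assumptions (a banner, key exchange and rejection plausibly add up to a few kilobytes going out), not something measured on the test server, and 1 GB is taken as 10^9 bytes.

```python
# Back-of-envelope: how many rejected SSH logins would it take to
# produce 1 GB of outbound traffic?
# ASSUMPTION: the per-attempt byte counts below are illustrative guesses,
# not measurements from the test server.
GB = 10 ** 9  # ASSUMPTION: 1 GB treated as 10^9 bytes


def attempts_needed(bytes_out_per_attempt: int, total_bytes: int = GB) -> int:
    """Return the number of attempts needed to reach total_bytes (rounded up)."""
    return -(-total_bytes // bytes_out_per_attempt)  # ceiling division


for per_attempt in (2_000, 5_000, 10_000):
    print(f"{per_attempt:>6} bytes/attempt -> {attempts_needed(per_attempt):,} attempts")
```

Even at a generous 10 KB going out per rejected attempt, that is 100,000 attempts for a single GB, which is nothing like the handful seen in the log.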

Of course, the presumed earlier SSH attempts may have just been the result of a scanning server getting lucky, whereas my later test server didn’t “get lucky” being scanned. It’s possible. To increase the possible attack surface, we put an nc (netcat) listener on ports 80, 443 and some others, just logging any connection attempt without returning outbound traffic. This again saw one or two attempts, but no major flood.
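
For anyone wanting to repeat this without netcat, a listener of that kind can be sketched in a few lines of Python. This is not the setup we ran (that was plain nc); the function names `open_listener` and `log_connections` are made up for illustration. It accepts a connection, notes who connected and when, and closes again without writing anything back.

```python
import socket
from datetime import datetime, timezone


def open_listener(port: int, host: str = "0.0.0.0") -> socket.socket:
    """Bind a TCP listening socket; port 0 lets the OS pick a free port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((host, port))
    sock.listen()
    return sock


def log_connections(sock: socket.socket, max_conns: int) -> list[str]:
    """Accept max_conns connections, log each one, and send no payload back."""
    entries = []
    for _ in range(max_conns):
        conn, (peer_host, peer_port) = sock.accept()
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        entry = f"{stamp} {peer_host}:{peer_port}"
        print(entry)
        entries.append(entry)
        # Close without writing any application data. The TCP handshake and
        # teardown packets still go out, but those are only a few dozen bytes.
        conn.close()
    return entries
```

Calling `log_connections(open_listener(8080), max_conns=10)` would log the next ten connection attempts on port 8080; binding to 80 or 443 normally needs root.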

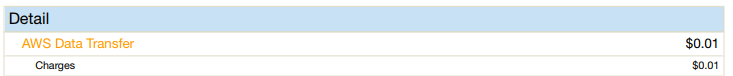

I figured that was the end of it, and shut down the server. But come billing time, we once again see a cent-charge for similar traffic.

And this time we know it definitely didn’t exist, because we were specifically monitoring for it. So, we know for a fact that we were getting billed for traffic that didn’t happen. “How quaint”. Naturally, because the AWS-side firewall was specifically left open, AWS billing doesn’t want to hear about it. I suppose we could re-run the test, this time with a fully set up AWS-side firewall, but it’s starting to chew up too much time.

My issues are these:

- For a high tech company, AWS billing is remarkably obtuse. That’s a business choice, and not to the clients’ benefit. Some years ago I asked my accountant whether my telco bill (Telstra at the time) was so convoluted for any particular accounting reason I wasn’t aware of, and her answer was simply “no”. This is the same. The company chooses to make their bills difficult to read and make sense of.

- Internal AWS network monitoring must be inadequate, if AWS hosted servers can do sweep scans and mass SSH login attempts. Those are patterns that can be caught. That is, presuming that those scans and attempts actually happened. If they didn’t, the earlier traffic didn’t exist either, in which case we’re getting billed for stuff that didn’t happen. It’s one or the other, right? (Based on the above observations, my bet is actually on the billing rather than the internal network monitoring; AWS employs very smart techs.)

- Us getting billed an extra cent on a bill doesn’t break the bank, but since it’s for something that we know didn’t happen, it’s annoying. It makes us wonder what else is wrong in the billing system, and whether other people too might get charged a cent extra here or there. Does this matter? Yes it does, because on the AWS end it adds up to many millions. And for bigger AWS clients, it will add up over time also.

Does anybody care? I don’t know. Do you care?

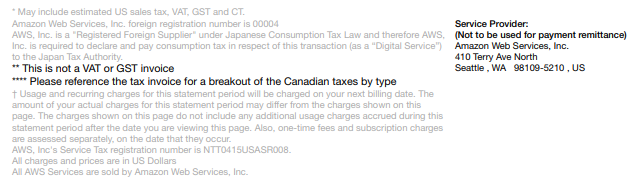

Oh, and while Google (AdWords, etc), Microsoft and others have over the last few years adjusted their invoicing in Australia to produce an appropriate tax invoice with GST (sales tax) even though the billing is done from elsewhere (Singapore in Google’s case), AWS doesn’t do this. Australian server instances are all sold from AWS Inc. in Seattle, WA.